Generative AI (GenAI) tools like ChatGPT have already become indispensable across organizations worldwide. CEOs are particularly enthusiastic about GenAI’s ability to let employees “do more with less”. According to the McKinsey Global Survey on the State of AI in 2024, 65% of organizations already use GenAI tools regularly, and Gartner forecasts that this number will reach 80% by 2026.

While GenAI platforms may significantly increase productivity, they also introduce new cybersecurity risks that organizations must address. This article discusses the main threats posed by GenAI and explains how to prevent data leakage via ChatGPT and other GenAI chatbots.

Main cyber threats posed by ChatGPT and other GenAI tools

Does ChatGPT leak your data?

Generative AI platforms allow users to quickly analyze large datasets, troubleshoot software bugs, schedule meetings, generate reports, create relevant content, and perform other routine tasks.

What makes this possible? Tools like OpenAI’s ChatGPT and Google’s Gemini rely on extensive pre-training on diverse text data, fine-tuning with specific examples, and sophisticated algorithms to generate human-like responses based on the input they receive. In simple terms, depending on the provider’s data usage settings, what you type into a chatbot may be retained and used to train future versions of the model, so your input could end up influencing the responses the tool gives to other users.

If you or your employees aren’t careful, you could enter information that puts your company at risk. This might include personally identifiable information, sensitive corporate data, financial documents, emails, PDFs, and other confidential assets. Data loss where a GenAI chatbot is involved even has its own name: conversational AI leak.

A notable example of a conversational AI leak occurred in the spring of 2023 when Samsung workers unwittingly leaked top-secret data while using ChatGPT to assist with tasks.

What happened

Conversational AI leak at Samsung

The root and consequences

In the spring of 2023, engineers from Samsung’s semiconductor division pasted confidential, proprietary source code into ChatGPT to have it checked for errors. In doing so, they shared sensitive corporate information with an external platform. This amounted to a data leak and resulted in widespread media coverage.

Lesson learned

- Never input intellectual property data into chatbots!

Nearly half of all companies worldwide use Microsoft tools as part of their information management structure. However, not all of them realize that Microsoft’s Copilot AI can access sensitive company data from sources such as a company’s Teams chats, Microsoft 365 apps, SharePoint sites, and OneDrive storage. Copilot then analyzes that data to generate new content for that particular company only.

Still, like any other software, GenAI tools can have vulnerabilities that may result in unauthorized access to data or systems. One such data leak happened with ChatGPT in 2023.

What happened

Bug in the redis-py open-source library used by ChatGPT

The root and consequences

During a nine-hour window on March 20, 2023, some active ChatGPT Plus subscribers could see other users’ billing information when clicking on their own “Manage Subscription” page. Some users could also see the titles of other users’ conversations, revealing what those subscribers had been using the AI chatbot for. The incident was caused by a bug in redis-py, an open-source Redis client library that ChatGPT used.

Lesson learned

- Never input intellectual property data into chatbots!

- Limit access to sensitive data within your systems to prevent accidental exposure of critical information.

For organizations, conversational AI leaks can result in many negative consequences.

Negative consequences of data leakage via GenAI services

Data leakage via chatbots can lead to numerous negative consequences, the most common of which are as follows:

- Loss of intellectual property. Leakage of proprietary information or trade secrets can result in a loss of competitive advantage, reduced market presence, and revenue loss. In addition, rivals may gain access to your valuable assets and use your technologies to their advantage.

- Operational disruption. Addressing and mitigating a data breach can significantly disrupt business operations, leading to downtime and a decrease in overall productivity. Organizations might need to redirect significant resources toward investigating and remediating the breach, as well as implementing stronger security measures.

- Reputation damage. Customers and partners might lose trust in a company that suffers a data leak, damaging its reputation. Additionally, negative publicity and media coverage can tarnish the brand’s image.

- Compliance violations. Failing to protect data adequately can lead to regulatory and legal consequences. Regulatory bodies may impose sanctions or take other legal action against an organization for non-compliance with data protection laws and regulations, such as GDPR, HIPAA, PCI DSS, CCPA, FCRA, the EU AI Act, and others. In addition, affected parties might file lawsuits against the organization for failing to protect their data adequately.

- Financial losses. Non-compliance with data protection regulations may result in significant fines and penalties for organizations. Also, organizations can face direct financial impact due to the loss of intellectual property, liability, fraud, theft, etc.

- Susceptibility to cyberattacks. Any data breach can make an organization a target for future cyberattacks, as bad actors may perceive it as vulnerable. Leaked credentials can be used in credential-stuffing attacks, which can result in unauthorized access to your systems. Cybercriminals can also use leaked data to craft more convincing phishing attacks on your employees.

Organizations can better protect themselves against ChatGPT data leaks by understanding these risks and implementing robust security practices.

Top 7 practices to prevent data leakage via GenAI tools

How to prevent data leaking via ChatGPT and other GenAI services?

The following best practices can help you harness the benefits of using GenAI chatbots while protecting your organization’s sensitive data from potential leaks.

1. Create a robust policy for GenAI use

Establishing a clear and comprehensive policy for GenAI use is essential. For this, you need to define and document what is considered “permissible use” of GenAI tools. When bringing the issue to the attention of the executive board, you should:

- Specify which departments or roles are authorized to use GenAI services.

- Outline acceptable and unacceptable use cases.

- Decide whether your employees can use their personal GenAI accounts for work purposes. If not, you may need to create corporate GenAI accounts that are protected with strong passwords.

You should also lay out clear processes for how you’ll monitor adherence to your policies and decide on the consequences of possible violations. Establishing procedures for regular policy reviews and updates is crucial as well.
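To make such a policy easier to enforce, some teams mirror it in a machine-readable form that internal tools can check automatically. Below is a minimal Python sketch of that idea. The `GenAIPolicy` structure, the role names, and the tool name are hypothetical examples, not part of any standard or product.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class GenAIPolicy:
    """Hypothetical machine-readable mirror of a written GenAI usage policy."""
    authorized_roles: frozenset[str]       # roles or departments allowed to use GenAI services
    approved_tools: frozenset[str]         # GenAI tools approved for work use
    allow_personal_accounts: bool = False  # may employees use personal accounts for work?


def is_permitted(policy: GenAIPolicy, role: str, tool: str, corporate_account: bool) -> bool:
    """Deny by default: every condition in the policy must be satisfied."""
    if role not in policy.authorized_roles:
        return False
    if tool not in policy.approved_tools:
        return False
    if not corporate_account and not policy.allow_personal_accounts:
        return False
    return True


# Example: only marketing and engineering may use the approved tool, via corporate accounts only
policy = GenAIPolicy(
    authorized_roles=frozenset({"marketing", "engineering"}),
    approved_tools=frozenset({"corporate-chatbot"}),
)

print(is_permitted(policy, "engineering", "corporate-chatbot", corporate_account=True))   # True
print(is_permitted(policy, "engineering", "corporate-chatbot", corporate_account=False))  # False
print(is_permitted(policy, "finance", "corporate-chatbot", corporate_account=True))       # False
```

The check denies by default: a request is allowed only if the role, the tool, and the account type all satisfy the written policy.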

2. Define which types of data mustn’t be uploaded to GenAI services

To prevent leaking confidential data through ChatGPT and other GenAI tools, write clear policies that outline what data is restricted from being uploaded to chatbots. Identify and communicate sensitive data types that your employees should never share with ChatGPT and other GenAI tools. These types of data can include:

- Personally identifiable information (PII) — Social security numbers, addresses, phone numbers, email addresses, usernames, passwords, and any other information about your employees and customers that can identify them.

- Protected health information (PHI) — Medical records, health insurance details, and other information related to health status or medical care.

- Financial information — Bank account numbers, credit card details, financial statements, and other sensitive financial records.

- Proprietary business information — Trade secrets, proprietary code, creative works, strategic plans, and other confidential corporate information.

Note that these are just some examples of critical info that mustn’t be revealed. Categorize data according to its sensitivity level (e.g. public, internal, confidential, restricted). Also, you may refer to laws like GDPR, HIPAA, or PCI DSS that define specific categories of sensitive data.
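Some of these categories can also be turned into automated checks, for example in a hypothetical pre-submission filter that inspects a prompt before it reaches a chatbot. The minimal Python sketch below illustrates the idea. The regular expressions and category labels are illustrative assumptions and far cruder than a real DLP engine; data without a fixed format, such as trade secrets or strategic plans, will not be caught this way.

```python
import re

# Hypothetical detection rules for a few sensitive data types. Real DLP engines use far
# more thorough rules plus context; formless data such as trade secrets won't match regexes.
SENSITIVE_PATTERNS = {
    "PII: US Social Security number": re.compile(r"\b\d{3}-\d{2}-\d{4}\b"),
    "PII: email address": re.compile(r"\b[\w.+-]+@[\w-]+\.[\w.-]+\b"),
    "Financial: payment card number": re.compile(r"\b(?:\d[ -]?){13,16}\b"),
    "Restricted: classification label": re.compile(r"\b(?:CONFIDENTIAL|RESTRICTED)\b"),
}


def luhn_valid(candidate: str) -> bool:
    """Luhn checksum, used to discard digit runs that cannot be real card numbers."""
    digits = [int(c) for c in candidate if c.isdigit()]
    total = 0
    for i, d in enumerate(reversed(digits)):
        if i % 2 == 1:  # double every second digit from the right
            d *= 2
            if d > 9:
                d -= 9
        total += d
    return len(digits) >= 13 and total % 10 == 0


def find_sensitive_data(prompt: str) -> list[str]:
    """Return the labels of all sensitive-data categories detected in a prompt."""
    findings = []
    for label, pattern in SENSITIVE_PATTERNS.items():
        for match in pattern.finditer(prompt):
            if label.startswith("Financial") and not luhn_valid(match.group()):
                continue  # card-like number that fails the checksum
            findings.append(label)
            break  # one hit per category is enough to block the prompt
    return findings


prompt = "Fix this: customer jane.doe@example.com paid with 4111 1111 1111 1111"
print(find_sensitive_data(prompt))
# ['PII: email address', 'Financial: payment card number']
```

The Luhn check is there to reduce false positives: long digit runs such as order numbers are only flagged as card numbers if they pass the same checksum real card numbers do.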

3. Perform regular security awareness training

Share all these policies with your employees and educate them on how to prevent data leaks when using ChatGPT. Provide guidelines on creating and maintaining secure accounts for GenAI tool usage, emphasizing the importance of using corporate accounts rather than personal ones whenever possible. If employees still use personal service accounts for GenAI chatbots, educate them on how to secure these accounts with strong passwords and Single Sign-On (SSO).

Highlight that failure to adhere to these policies may result in disciplinary actions, including restricted access to AI tools, formal warnings, and even termination in severe cases.

Talk about the tactics cybercriminals use to trick people into providing sensitive information. Educate your employees about scam GenAI websites and phishing attacks involving GenAI services. Encourage employees to promptly report to your IT department any GenAI tool or website that asks them for sensitive information.


4. Enforce data security measures

Implement robust data security measures, such as encrypting sensitive data and establishing strict user authentication procedures, including multi-factor authentication.
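Multi-factor authentication often relies on time-based one-time passwords (TOTP), the six-digit codes shown by authenticator apps. To illustrate how such a second factor is checked, here is a minimal Python sketch of TOTP verification following RFC 6238, using only the standard library. It assumes the defaults most authenticator apps use (HMAC-SHA1, six digits, 30-second steps) and is meant for illustration only; in practice, rely on your identity provider or a well-vetted library.

```python
import hashlib
import hmac
import struct
import time
from typing import Optional


def hotp(secret: bytes, counter: int, digits: int = 6) -> str:
    """HMAC-based one-time password (RFC 4226)."""
    msg = struct.pack(">Q", counter)  # counter as 8-byte big-endian integer
    digest = hmac.new(secret, msg, hashlib.sha1).digest()
    offset = digest[-1] & 0x0F  # dynamic truncation offset
    code = struct.unpack(">I", digest[offset:offset + 4])[0] & 0x7FFFFFFF
    return str(code % 10 ** digits).zfill(digits)


def totp(secret: bytes, at: Optional[float] = None, step: int = 30, digits: int = 6) -> str:
    """Time-based one-time password (RFC 6238): HOTP applied to the current time step."""
    if at is None:
        at = time.time()
    return hotp(secret, int(at // step), digits)


def verify_totp(secret: bytes, submitted: str, at: Optional[float] = None,
                step: int = 30, window: int = 1) -> bool:
    """Accept a code from the current time step or +/- `window` steps (clock drift)."""
    if at is None:
        at = time.time()
    for drift in range(-window, window + 1):
        t = at + drift * step
        if t < 0:
            continue
        # Constant-time comparison avoids leaking information through timing
        if hmac.compare_digest(totp(secret, t, step), submitted):
            return True
    return False


# RFC 6238 SHA-1 test secret; at t = 59 s the 6-digit code is 287082
secret = b"12345678901234567890"
print(totp(secret, at=59))                   # 287082
print(verify_totp(secret, "287082", at=59))  # True
```

The small acceptance window tolerates minor clock differences between the server and the user's device without accepting stale codes indefinitely.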

In addition, don’t overlook traditional security tools. Deploy Security Information and Event Management (SIEM) systems and data loss prevention (DLP) systems to protect your organization against unauthorized access, data breaches, and injection attacks.

Regularly audit your data security systems to identify and address potential vulnerabilities. It’s also critical to have an incident response plan to quickly and effectively address any data breaches.

5. Follow relevant cybersecurity guidelines

Refer to relevant frameworks that govern AI use and offer guidance on securing your systems while using AI tools. For example, you may choose to follow:

- The Blueprint for an AI Bill of Rights. This is a framework designed by the White House Office of Science and Technology Policy to guide the development and deployment of AI systems in a manner that protects the rights and freedoms of individuals. While it does not directly regulate AI use in the way that formal legislation or regulations do, it provides a set of principles aimed at ensuring AI technologies are used ethically and responsibly.

- AI Risk Management Framework (AI RMF) by NIST. NIST has released a comprehensive framework to help organizations manage the risks associated with AI platforms. In July 2024, NIST also introduced AI RMF: Generative AI Profile, which can help organizations identify unique risks posed by GenAI specifically. Both frameworks aim to enhance transparency and accountability within organizations that utilize AI technologies, ensuring that AI tools are deployed responsibly.


6. Limit access to sensitive data

Safeguard your sensitive data by granting access only to personnel who need it. By minimizing access to your critical resources and continuously verifying access requests, you can significantly reduce the risk of confidential data being leaked via ChatGPT and other GenAI platforms.

Consider adopting the zero trust approach and the principle of least privilege to minimize access points and prevent data breaches, whether accidental or intentional.
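In code, least privilege comes down to denying access by default and evaluating every request against explicit, time-limited permissions. The minimal Python sketch below illustrates that idea. The `AccessGrant` structure, the user and resource names, and the in-memory list of grants are hypothetical; in practice, these decisions belong in your identity and access management (IAM) or privileged access management (PAM) system.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta, timezone


@dataclass(frozen=True)
class AccessGrant:
    """A hypothetical explicit permission: who may access which resource, and until when."""
    user: str
    resource: str
    expires_at: datetime


def has_access(grants: list[AccessGrant], user: str, resource: str, now: datetime) -> bool:
    """Deny by default: allow only if an unexpired grant exists for this user and resource."""
    return any(
        g.user == user and g.resource == resource and now < g.expires_at
        for g in grants
    )


def grant_temporary(grants: list[AccessGrant], user: str, resource: str,
                    now: datetime, duration: timedelta = timedelta(hours=1)) -> AccessGrant:
    """Just-in-time access: issue a grant that lapses automatically after `duration`."""
    grant = AccessGrant(user, resource, now + duration)
    grants.append(grant)
    return grant


now = datetime(2024, 7, 1, 9, 0, tzinfo=timezone.utc)
grants: list[AccessGrant] = []
grant_temporary(grants, "alice", "finance-reports", now)

print(has_access(grants, "alice", "finance-reports", now))                       # True
print(has_access(grants, "alice", "finance-reports", now + timedelta(hours=2)))  # False: grant expired
print(has_access(grants, "bob", "finance-reports", now))                         # False: never granted
```

Because `has_access` re-evaluates the grants on every call, expired permissions stop working without anyone having to revoke them manually, which is in the spirit of zero trust's continuous verification.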

7. Implement continuous monitoring

Deploy a monitoring tool to get visibility into GenAI use and what data users input into it. Ideally, your monitoring tool should also be able to send automated alerts for any unusual activities to help you detect and respond to security incidents promptly.
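To illustrate the alerting idea, the minimal Python sketch below scans web proxy log entries for requests to GenAI services and flags unusually large uploads. The log format, the domain watchlist, and the size threshold are assumptions made for this example; a dedicated monitoring tool would capture much richer context, such as what was actually typed or pasted.

```python
from dataclasses import dataclass
from urllib.parse import urlparse

# Hypothetical watchlist: extend it with every GenAI service your policy covers.
GENAI_DOMAINS = {"chatgpt.com", "chat.openai.com", "gemini.google.com", "claude.ai"}
# Hypothetical threshold: larger outbound requests are flagged as possible bulk uploads.
UPLOAD_ALERT_BYTES = 50_000


@dataclass
class Alert:
    user: str
    host: str
    reason: str


def is_genai_host(host: str) -> bool:
    """Match a watched domain exactly or any of its subdomains (not look-alike domains)."""
    return any(host == d or host.endswith("." + d) for d in GENAI_DOMAINS)


def scan_proxy_log(lines: list[str]) -> list[Alert]:
    """Parse assumed log lines of the form: timestamp user method url bytes_sent."""
    alerts = []
    for line in lines:
        timestamp, user, method, url, bytes_sent = line.split()
        host = urlparse(url).hostname or ""
        if not is_genai_host(host):
            continue
        if method == "POST" and int(bytes_sent) > UPLOAD_ALERT_BYTES:
            alerts.append(Alert(user, host, f"large upload ({bytes_sent} bytes) at {timestamp}"))
        else:
            alerts.append(Alert(user, host, f"GenAI access at {timestamp}"))
    return alerts


sample_log = [
    "2024-07-01T09:15:00Z alice GET https://chatgpt.com/ 812",
    "2024-07-01T09:16:30Z alice POST https://chatgpt.com/ 120000",
    "2024-07-01T09:17:00Z bob GET https://intranet.example.com/wiki 640",
]
for alert in scan_proxy_log(sample_log):
    print(f"ALERT {alert.user} -> {alert.host}: {alert.reason}")
```

Matching on the exact domain or a dot-prefixed suffix avoids both missing subdomains and accidentally flagging look-alike domains such as `notchatgpt.com`.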

By following these best practices, organizations can minimize the risk of ChatGPT data leaks while leveraging the benefits of GenAI services.

How can Syteca help prevent data leakage via GenAI tools?

Syteca can help your organization make the most of GenAI’s capabilities without risking data security.

Syteca is a powerful insider risk management platform that provides advanced monitoring and access management capabilities to prevent ChatGPT data leakage.

Here are some of the key features that can help you safeguard your sensitive data:

Comprehensive user activity monitoring

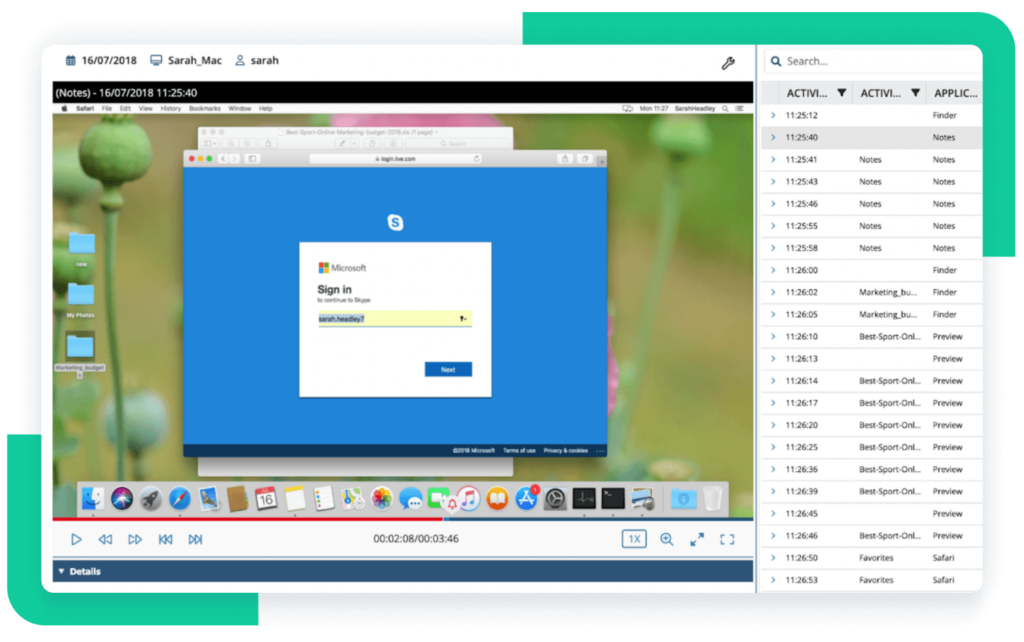

Syteca monitors and records user sessions across various endpoints, including desktops, servers, and virtual environments. This includes capturing all on-screen activities, which can help you view interactions with AI chatbots.

Take note that Syteca logs clipboard activities, which is vital for identifying potential data leakage attempts where sensitive information may be copied and pasted into AI chatbots. Besides clipboard operations, you can monitor:

- File upload operations

- Executed commands (Linux)

- Keystrokes

- Connected USB devices

- Launched applications

- Visited URLs

Alerting and incident response

You can also configure Syteca to send you alerts when users attempt to access ChatGPT (either https://chat.openai.com or https://platform.openai.com) and other GenAI websites that you specify in the management panel.

Once you get such an alert, you can:

- Watch live user sessions to monitor how employees use AI tools and what data they input into chatbots.

- Send a warning message to the user to let them know they’re violating your organization’s data handling guidelines.

- Block the user or immediately terminate the application if their actions are malicious or harmful.

Auditing and reporting

Syteca can help your organization comply with data protection regulations like GDPR, HIPAA, and PCI DSS by providing detailed logs and audit trails of all user activities. In total, Syteca offers more than 20 types of default and custom reports.

For security audits and incident investigations, the platform provides detailed forensic session records, capturing all relevant activities and events during a user session. Thus, you can trace the steps that led to data leakage, identify risky activities, and implement measures to prevent further incidents.

Access management

With Syteca, you can enforce strict access control policies, ensuring that only the right users can access your sensitive data. The platform lets you:

- Implement fine-grained access controls to specify who can access what data and under what circumstances.

- Grant time-limited permissions to specific users upon request and revoke those permissions immediately after use.

- Restrict access to sensitive data based on the context, such as the user’s role or time of day.

The platform also lets you enforce two-factor authentication (2FA) for extra protection of corporate accounts.

Integration with other security solutions

Syteca seamlessly integrates with other types of security solutions to provide a comprehensive security ecosystem:

- SIEM. The platform can integrate with Security Information and Event Management (SIEM) systems to compile data from multiple sources and deliver a unified view of security events.

- DLP. Syteca integrates with DLP systems such as Digital Guardian by Fortra to provide you with a multi-layered approach to data protection against leakage through AI chatbots and other channels.

Conclusion

As GenAI tools continue to evolve, it’s crucial to stay proactive and vigilant in data protection efforts. By implementing the seven best practices discussed in this article, you can effectively mitigate the risks posed by GenAI and protect your sensitive information.

Syteca can complement these practices by offering robust user activity monitoring, real-time alerting and response, and comprehensive access management capabilities.